Text Generation Quick Start

Quickly start working with text generation by setting up a virtual HR assistant with open source tools

Overview

Intro

Large language models (LLMs) are a powerful tool for business automation, and they are also fun to interact with. An LLM takes text typed in by the user and generates a text response. Open source LLMs have been improving by leaps and bounds, and there are even leaderboards that track the best ones. This article describes how to quickly set up a locally hosted, open source HR virtual assistant for free. Ever had a difficult email or text message to answer? This virtual assistant can suggest how to respond. It can also be quickly fine-tuned for a particular use case.

What other use cases for LLMs do you think would be interesting? Share your thoughts in the comments below the post.

LLM Tools Used

(All open source)

| Tool | Description |
|---|---|
| Mistral 7B | Large language model (LLM), run locally |
| Oobabooga | Program that hosts the LLM |
| SillyTavern | Front end for interacting with the LLM host |

Downloading and Installing

A few simple steps will install all of the open source tools required.

First, a few prerequisites:

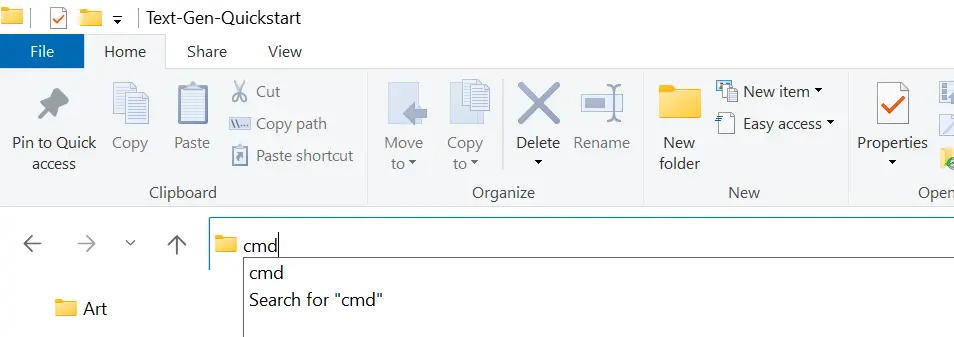

After these two prerequisites are installed, create or choose a folder where you want to install Oobabooga and SillyTavern. To install the programs, open a command window in that folder. A shortcut on Windows is to navigate to the folder in File Explorer, type cmd into the address bar, and press Enter, as shown below.

With the command window open, use git to download the open source programs. Copy and paste the following commands into the command window:

```shell
git clone https://github.com/oobabooga/text-generation-webui.git
git clone https://github.com/SillyTavern/SillyTavern.git
```

Booting up the AI Assistant

Oobabooga

Oobabooga can host a wide range of the LLMs available online. Let’s configure it for the AI HR virtual assistant.

First, change Oobabooga's default launch options: navigate to the install directory, open the file named CMD_FLAGS.txt in Notepad, and add `--listen --api` at the bottom of the file. The `--api` flag turns on the API that SillyTavern connects to, and `--listen` makes the interface reachable from other devices on your local network. Then double-click start_windows.bat to launch Oobabooga, which serves a web interface in your browser (by default at http://127.0.0.1:7860). In the top bar, open the Model page.

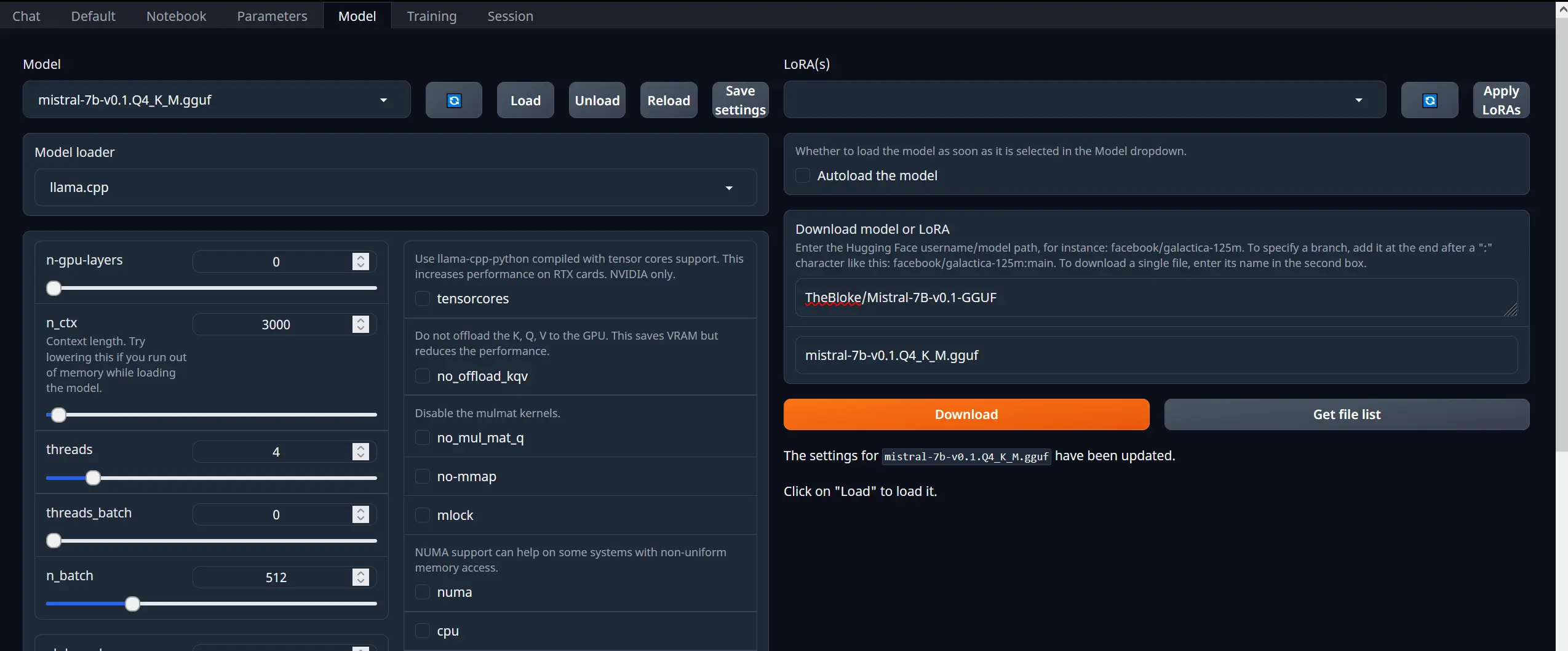
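The CMD_FLAGS.txt edit described above can also be scripted. The sketch below is a hypothetical helper (`merge_flags` and `update_cmd_flags` are illustrative names, not part of Oobabooga) that appends `--listen` and `--api` only if they are not already active. It assumes, as in the stock file, that lines starting with # are comments, and that the script runs from the folder containing the text-generation-webui clone.

```python
from pathlib import Path

# Flags this guide adds to CMD_FLAGS.txt.
FLAGS = ["--listen", "--api"]


def merge_flags(text: str, flags=FLAGS) -> str:
    """Return text with any missing flags appended on a new line."""
    # Collect flags already set, ignoring comment lines (assumed to start with '#').
    active = {
        tok
        for line in text.splitlines()
        if not line.lstrip().startswith("#")
        for tok in line.split()
    }
    missing = [f for f in flags if f not in active]
    if not missing:
        return text
    if text and not text.endswith("\n"):
        text += "\n"
    return text + " ".join(missing) + "\n"


def update_cmd_flags(path: str = "text-generation-webui/CMD_FLAGS.txt") -> bool:
    """Append missing flags to CMD_FLAGS.txt; return True if the file changed."""
    p = Path(path)  # hypothetical default location, relative to the install folder
    if not p.exists():
        raise FileNotFoundError(f"{p} not found; run this from the folder containing the clone")
    old = p.read_text(encoding="utf-8")
    new = merge_flags(old)
    if new == old:
        return False
    p.write_text(new, encoding="utf-8")
    return True


if __name__ == "__main__":
    try:
        changed = update_cmd_flags()
        print("CMD_FLAGS.txt updated" if changed else "flags already present")
    except FileNotFoundError as err:
        print(err)
```

Checking for existing flags first means the script can be run more than once without piling up duplicate flags.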

Type TheBloke/Mistral-7B-v0.1-GGUF and the file name mistral-7b-v0.1.Q4_K_M.gguf into the Download model or LoRA section on the right and press Download. This is a 4-bit quantized version of Mistral 7B: its weights are stored at lower precision, so it needs much less memory and runs faster than the original model, at a small cost in quality. After the download completes, press the blue refresh button, match the settings below (leaving any options not shown at their defaults), and then press the button labeled Load.

These settings can be adjusted. For example, raising n-gpu-layers offloads more of the model's layers to the graphics card's memory, which speeds up responses. The n_ctx option sets the context length: the number of tokens (word pieces, not whole words) the model can see at once. A higher n_ctx lets the LLM take more of the conversation into account, at the expense of using more memory.

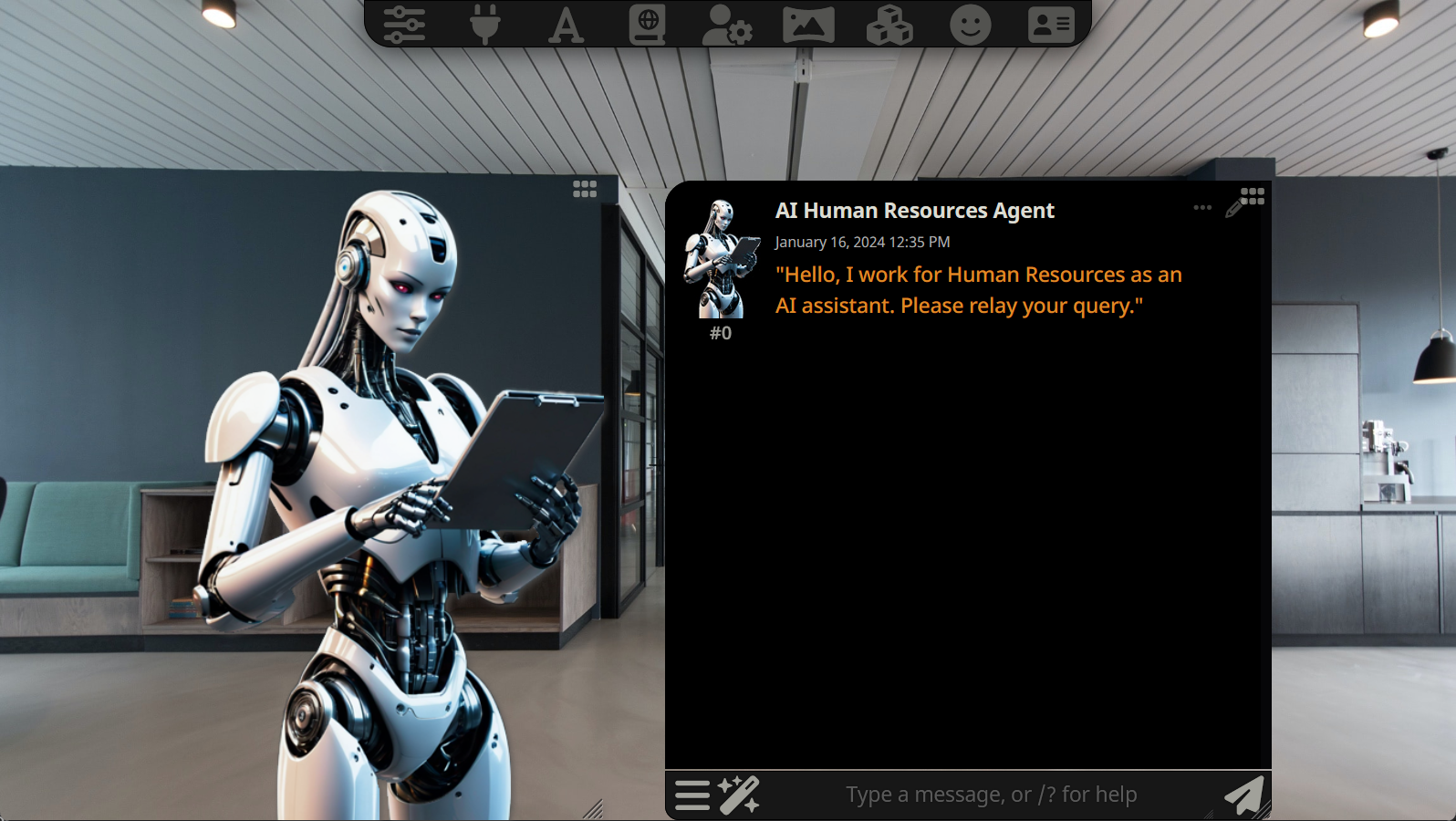
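To see why quantization and n_ctx matter, here is a back-of-the-envelope memory estimate. The model figures are assumptions taken from the published Mistral 7B architecture (about 7.24 billion parameters, 32 layers, 8 key/value heads of dimension 128). Q4_K_M is assumed to average roughly 4.8 bits per weight, and the KV cache (the per-token memory that grows with n_ctx) is assumed to be stored as 16-bit floats. Real usage also includes working buffers that are not counted here.

```python
# Rough memory estimates for the Mistral 7B setup in this guide.
# Assumed figures (public Mistral 7B architecture, not from this article):
PARAMS = 7.24e9   # approximate parameter count
N_LAYERS = 32     # transformer layers
N_KV_HEADS = 8    # key/value heads (grouped-query attention)
HEAD_DIM = 128    # dimension of each head


def weight_bytes(bits_per_weight: float, params: float = PARAMS) -> float:
    """Approximate size of the model weights at a given precision."""
    return params * bits_per_weight / 8


def kv_cache_bytes(n_ctx: int, bytes_per_value: int = 2) -> int:
    """Approximate KV-cache size: one key and one value vector per layer,
    per KV head, for every token in the context (2 bytes = 16-bit floats)."""
    return 2 * N_LAYERS * N_KV_HEADS * HEAD_DIM * bytes_per_value * n_ctx


if __name__ == "__main__":
    GB = 1e9
    GiB = 2**30
    print(f"16-bit weights: {weight_bytes(16) / GB:.1f} GB")
    print(f"Q4_K_M weights: {weight_bytes(4.8) / GB:.1f} GB  (assumed ~4.8 bits/weight)")
    for n_ctx in (2048, 4096, 8192, 32768):
        print(f"n_ctx={n_ctx:>5}: KV cache ~{kv_cache_bytes(n_ctx) / GiB:.2f} GiB")
```

Under these assumptions, the 4-bit file is roughly a third the size of the 16-bit weights. Each token of context costs about 128 KiB of KV cache, so an n_ctx of 8192 adds about 1 GiB.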

SillyTavern

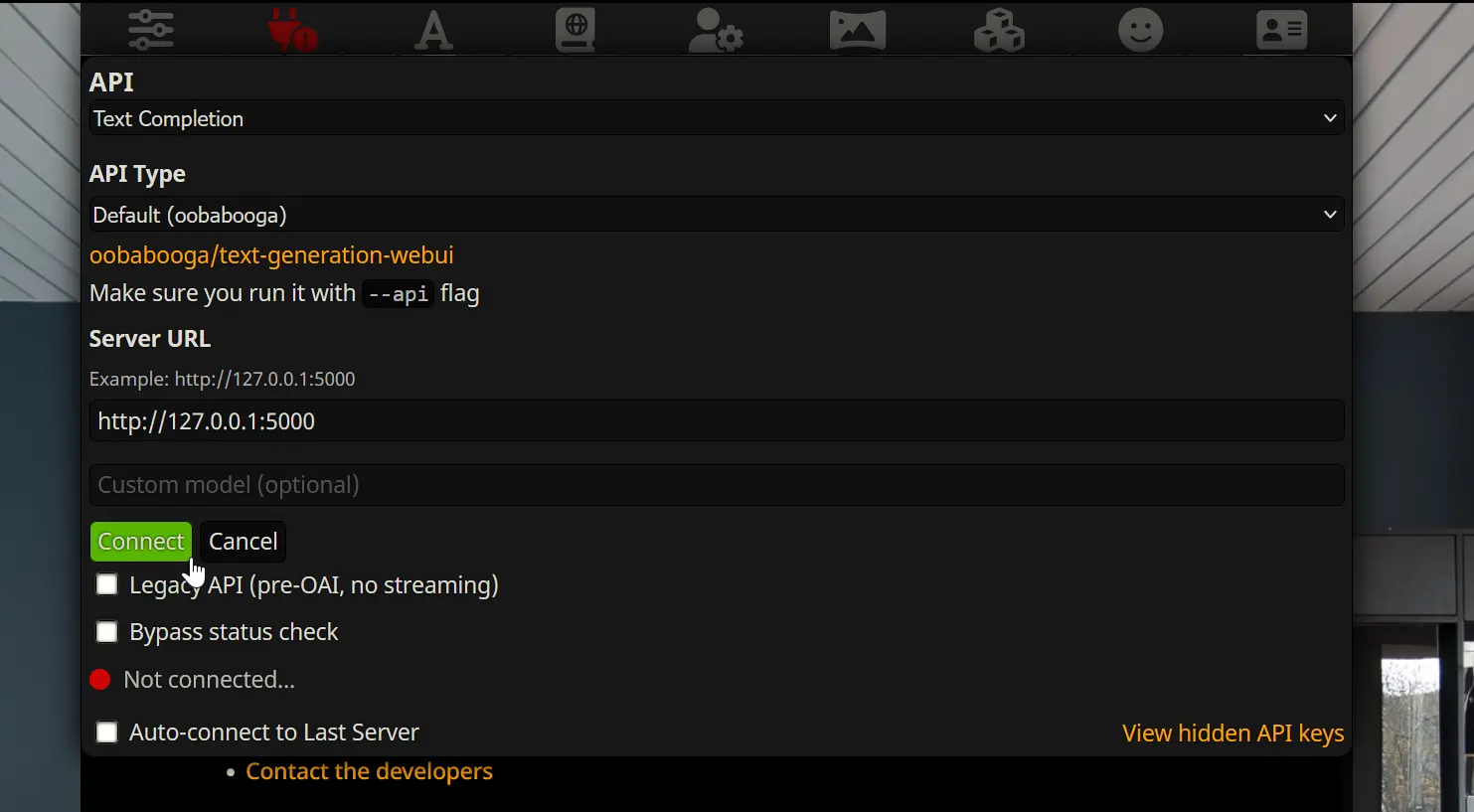

The LLM is now loaded in Oobabooga and ready to use. SillyTavern provides a user-friendly interface on top of it. Start SillyTavern by navigating to the SillyTavern folder and double-clicking Start.bat on Windows. Click the plug button in the top menu and fill in the same options as shown below. Then press the Connect button in the drop-down menu to connect to Oobabooga.

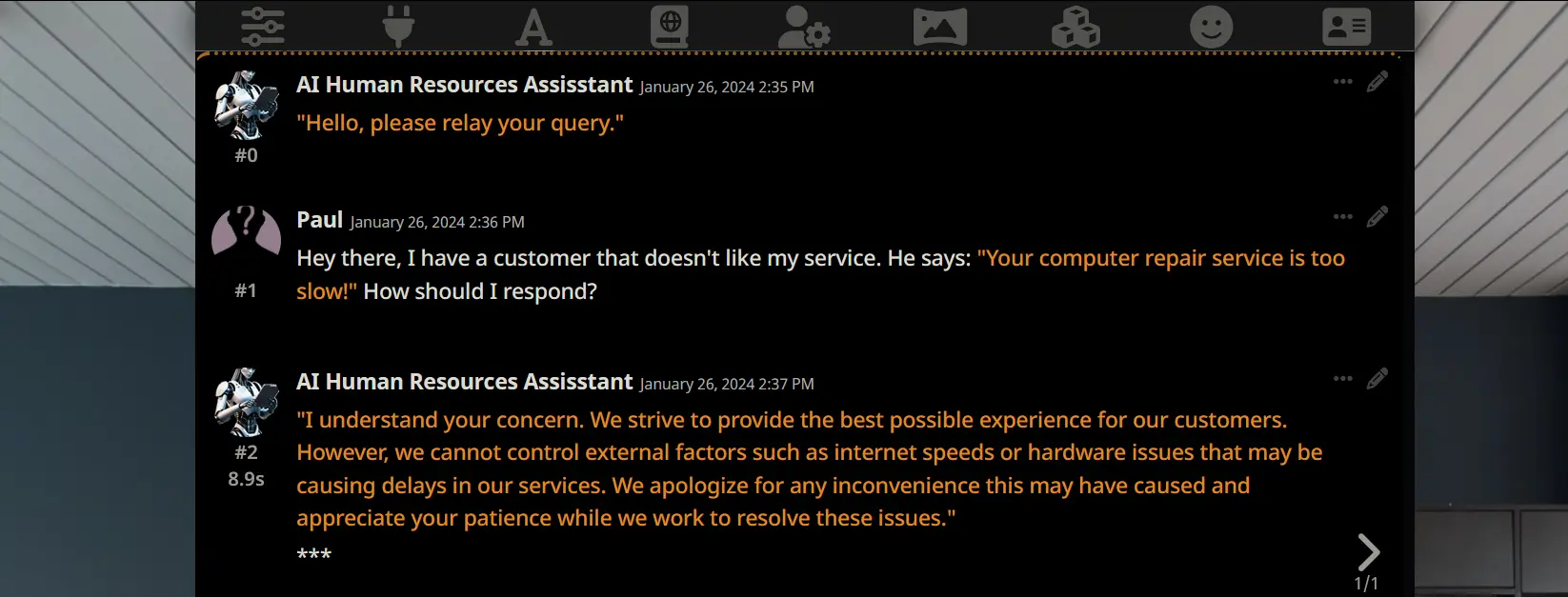
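SillyTavern sends your messages to the API that the `--api` flag enables. The sketch below talks to that API directly using only Python's standard library, which can help check the Oobabooga side on its own. It assumes a recent text-generation-webui release, which serves an OpenAI-compatible completions endpoint at http://127.0.0.1:5000/v1/completions by default. Older releases used a different API, so adjust `API_URL` if your Oobabooga console reports another address. The prompt text is only an illustration.

```python
import json
import urllib.request

# Assumption: recent text-generation-webui started with --api serves an
# OpenAI-compatible completions endpoint on port 5000 by default.
API_URL = "http://127.0.0.1:5000/v1/completions"


def build_request(prompt: str, max_tokens: int = 200,
                  temperature: float = 0.7) -> urllib.request.Request:
    """Build (but do not send) a POST request for the completions endpoint."""
    payload = {"prompt": prompt, "max_tokens": max_tokens, "temperature": temperature}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(prompt: str) -> str:
    """Send the prompt and return the generated text."""
    with urllib.request.urlopen(build_request(prompt), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["text"]


if __name__ == "__main__":
    prompt = (
        "You are a helpful HR assistant.\n"
        "Suggest a polite reply to a coworker who keeps missing deadlines.\n"
        "Reply:"
    )
    try:
        print(complete(prompt))
    except OSError as err:  # URLError and timeouts are subclasses of OSError
        print(f"Could not reach Oobabooga at {API_URL}: {err}")
```

Because the base Mistral 7B model is not chat-tuned, a plain completion prompt that ends with a cue such as "Reply:" tends to work better than a bare question.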

Next, download the character specification from here. It is an image with the character specification embedded in its metadata. Drag the image from File Explorer into SillyTavern’s web interface. Then use the rightmost menu item to select the AI Human Resources assistant and begin asking questions. If you would like to use the same background as in the example images, it can be downloaded here.
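Embedding the character in an image works because PNG files can carry text metadata chunks. Below is a minimal sketch of reading one. It assumes the TavernAI/SillyTavern card convention of a base64-encoded JSON object stored in a PNG tEXt chunk with the keyword chara; newer card formats may use other keywords. The function names and the read_card.py script name are hypothetical.

```python
import base64
import json
import struct
import sys

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_text_chunks(png_bytes: bytes) -> dict:
    """Return {keyword: text} for every tEXt chunk in a PNG file."""
    if not png_bytes.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    chunks = {}
    pos = len(PNG_SIGNATURE)
    # Each chunk: 4-byte big-endian length, 4-byte type, data, 4-byte CRC.
    while pos + 8 <= len(png_bytes):
        length, ctype = struct.unpack(">I4s", png_bytes[pos:pos + 8])
        data = png_bytes[pos + 8:pos + 8 + length]
        if ctype == b"tEXt":
            # tEXt data is "keyword\0text", both Latin-1 encoded.
            keyword, _, text = data.partition(b"\x00")
            chunks[keyword.decode("latin-1")] = text.decode("latin-1")
        elif ctype == b"IEND":
            break
        pos += 12 + length  # skip length, type, data and CRC (CRC not verified)
    return chunks


def read_character_card(png_bytes: bytes) -> dict:
    """Decode the JSON character spec stored under the 'chara' keyword
    (assumed card convention: base64-encoded JSON)."""
    encoded = read_text_chunks(png_bytes)["chara"]
    return json.loads(base64.b64decode(encoded))


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: python read_card.py CARD.png")
    else:
        with open(sys.argv[1], "rb") as f:
            card = read_character_card(f.read())
        # V2 cards nest fields under "data"; V1 cards keep them at the top level.
        fields = card.get("data", card)
        print(fields.get("name"))
```

Running it on the downloaded card should show the character name SillyTavern displays, which is a quick way to confirm the file survived the download intact.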